I recently undertook a journey to transfer my DNS hosting from Namecheap to Amazon’s Route53 service. Doing so wasn’t as straightforward as I had hoped, so I am writing this post to go over some of the challenges I ran into and what I learned in the process.

First off: I don’t think I will ever really understand DNS fully. Every time I think I’ve got a solid handle on the basics, I learn something new about what should have been DNS fundamentals and it forces me to entirely rethink the flow of how I thought things were working.

As part of the DNS lessons, I was forced to look into getting a mailserver set up for my domain since I could no longer use Namecheap’s email forwarding service without using their DNS plans. I am currently using a $10/month hosted email server ($2/month per mailbox, but required 5 mailboxes with Rackspace’s basic email plan), but I am sure one could just as easily be set up on my home server or on an AWS EC2 instance. But, since I needed email now in order to verify my domain ownership with Route53 (they send an email to verify the domain, but I had already opted out of Namecheap’s DNS services and lost the email forwarding), I chose the simple option and got some basic email hosting so I could have admin receive email at my domain.

In the very near future I will be creating a new email server for myself - I began Hurricane Electric’s IPv6 Certification to learn a thing or two about DNS and IPv6. Part of this involves having an email server resolve a AAAA record for your domain, something Rackspace’s email did not provide - at least at the tier I subscribed to. So… why not take things into my own hands?

Why Route53?

So the first question anyone would probably ask me is “Why Route53?”. Lots of answers - some technical, but mostly personal - I work with AWS professionally and it’s comfortable enough at this point that I feel at home when learning about foreign concepts… Like DNS… So full disclosure up front - there was no “good” reason. I wanted to see if I could, and since I am hosting a lot in Amazon as it is, I didn’t mind locking myself to the AWS ecosystem.

The bullet points of the “why” are:

- I chose/was already using AWS components that are tightly integrated with Route53
  - tied together with Alias Resource Record Sets (see below) - work with S3, CloudFront, Elastic Load Balancers, or other Route53 endpoints
- Route53 is cheap
  - $0.50 for my hosted zone, and the queries for my resources are actually free (Alias Resource Record Sets are awesome)
- Familiar API for making changes + many well-supported SDKs
  - translates into vast support network + lots of automation opportunities (my favorite)
- Easy to create subdomains/additional hosted zones (again, automated)

I also have recently decided to take Upspin for a spin, and have begun hosting things myself (like an email server). The static website was easy enough to manage, but Upspin requires A records on the URL, meaning that if my Upspin server ever goes down, updating the DNS will potentially be required. At that point, I would be at the mercy of all the TTLs (Time-To-Live; amount of time a DNS result is cached) at the various locations DNS gets cached. This just wouldn’t do for me. Even with a 300s TTL (5 minutes), many DNS servers can (and will) ignore the TTL set on the record and cache it for however long they want. With all the uncertainties in DNS, I wanted something that was a bit more dynamic and predictable.

Above all else, I tend to have a goal of automating everything. Setting the DNS on Namecheap wasn’t automatic, and while I definitely did not even look to see if there was an API involved (sorry if there is!), it didn’t feel worth it for me to try and re-invent the wheel and build out some extra orchestration layer when, with my current stack, I knew I would only need to add a few lines of code to get a Route53 resource created in my CloudFormation template.

The end-to-end automation opportunities of having a large infrastructure stack - web servers, email servers, and DNS - all in one place were just too tempting to pass up.

Power of Route53’s Alias Resource Record Sets (and a little about DNS)

Okay so I have been hinting at Route53’s awesomeness like I’m some Amazon Web Services shill (I assure you, I am not), and so I will continue to do just that as I explain some of my favorite features/capabilities provided by Route53. Skip down to the next sections if you just want to know how I built everything.

Route53’s magic (in my mind) comes from two main things:

- Providing DNS resolutions for any alias resource record sets you set up - alias resource record sets are exclusive to Route53 and can only target specific AWS services within your account.

- Layered health-checks and weighted routing options - you can set up multiple cascading Route53 entries to handle Blue/Green deployments, automatic failovers, or even a poor man’s load balancer (with some help from a lot of automation/integration with other AWS services)
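As a rough illustration of the weighted routing idea, here is a minimal sketch that prints a Route53 change batch with two weighted A records for a Blue/Green split. The record name `app.andrewalexander.io.`, the `blue`/`green` identifiers, the 60 second TTL, and the 203.0.113.x addresses (a documentation-only range) are all assumptions made up for the example, not part of my actual setup. Route53 sends each record a share of traffic equal to its weight divided by the sum of the weights.

```shell
# Print one weighted A record change.
# Arguments: record name, set identifier, weight, IP address.
weighted_change() {
  cat <<EOF
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "$1",
        "Type": "A",
        "SetIdentifier": "$2",
        "Weight": $3,
        "TTL": 60,
        "ResourceRecords": [{ "Value": "$4" }]
      }
    }
EOF
}

# Print a full change batch holding a "blue" and a "green" record.
# Arguments: record name, blue weight, blue IP, green weight, green IP.
blue_green_batch() {
  printf '{\n  "Changes": [\n'
  weighted_change "$1" blue "$2" "$3"
  printf ',\n'
  weighted_change "$1" green "$4" "$5"
  printf '  ]\n}\n'
}

# Hypothetical 90/10 split between two servers.
blue_green_batch app.andrewalexander.io. 90 203.0.113.10 10 203.0.113.20
```

The printed JSON could be saved to a file and handed to `aws route53 change-resource-record-sets --hosted-zone-id <your zone id> --change-batch file://batch.json`; shifting traffic is then just a matter of re-running it with different weights.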

The big draw for my use-case was the ability to assign an alias resource record set to an elastic load balancer for the root domain. Depending on how strict DNS providers are with RFCs, possible for me without Route53 without changing my infrastructure hosting provider (to Goog

For background - in a typical DNS setup, the root domain/zone apex (the “raw” domain - andrewalexander.io in my case) must be set up with an A Record (or AAAA with IPv6)**. By definition, A/AAAA Records can only be IP addresses. But, when an application is sitting behind a reverse proxy or load balancer, the IP addresses of both the load balancer and the underlying servers can (and do) change relatively often.

** Full disclosure - some DNS providers (like Namecheap’s Basic DNS) do let you use a CNAME for the zone apex, but you will lose the ability to establish some kinds of DNS records. This may not matter, but as mentioned before I had been going through the IPv6 certification and a step towards the end of that is setting up Glue A Records. This would have been impossible without an A record on the zone apex.

Now, CNAMEs are typically used to address this problem of “hot potato” with IP addresses. A CNAME is used when you want to have one domain point to another; this is commonly done with load balancers behind subdomains (something.andrewalexander.io). Let’s say we have a load balancer with the DNS name of load-balancer.123longhashvalue.provider-url.life. A CNAME is set up that points mail.andrewalexander.io to load-balancer.123longhashvalue.provider-url.life. When a user then goes to that URL, their DNS query will return the load-balancer.123longhashvalue.provider-url.life hostname, which will subsequently be queried recursively until it finds the resulting A (or AAAA) record corresponding to the server with the actual content on it. The final returned IP address will get cached as normal with DNS, and any updates to the IP addresses behind the scenes will eventually get propagated out.
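To make that chain concrete, here is a toy sketch of the lookup in plain shell. It does not talk to real DNS: the `lookup` function is a hard-coded stand-in for a record table, and the 203.0.113.10 address is a made-up value from a documentation-only range. Against a live domain, `dig +short mail.andrewalexander.io` would show the same kind of chain.

```shell
# Toy record table standing in for real DNS (all values are illustrative).
lookup() {
  case "$1" in
    mail.andrewalexander.io)
      echo "CNAME load-balancer.123longhashvalue.provider-url.life" ;;
    load-balancer.123longhashvalue.provider-url.life)
      echo "A 203.0.113.10" ;;
    *)
      return 1 ;;
  esac
}

# Follow CNAMEs until an A record is found, giving up after 8 hops.
resolve() {
  name=$1
  hops=0
  while [ "$hops" -lt 8 ]; do
    record=$(lookup "$name") || return 1
    type=${record%% *}    # text before the first space
    value=${record#* }    # text after the first space
    if [ "$type" = "A" ]; then
      echo "$value"
      return 0
    fi
    name=$value           # CNAME: look up the target name next
    hops=$((hops + 1))
  done
  return 1
}

resolve mail.andrewalexander.io
```

If the load balancer's A record changes, only the second entry in the table changes; the CNAME for mail.andrewalexander.io stays exactly as it was, which is the whole point.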

The important point to note in that example is that as the IP addresses change, there is no requirement to manually change any DNS entries/configurations. This makes for a happy application owner/network administrator (in DevOps world, “you build it, you own it”?). So… since I always tend to assume the worst in failure modes, I began designing a solution involving the quickest failover while being as close to fully automated as possible. In later posts, I will describe my mail server journey - automating failover and recovery of my email server hosted on EC2, orchestrated with Lambda and Autoscaling Group Lifecycle hooks.

Oh, and DNS queries against Alias Resource Record Sets pointing to S3 websites, load balancers, CloudFront distributions, or Elastic Beanstalk environments are FREE! Any of the other usual suspects in DNS, however, are charged at a rate per million queries.
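For reference, an alias record at the zone apex can be described with a small change batch. The sketch below only prints the JSON; it assumes a CloudFront target, where `Z2FDTNDATAQYW2` is the fixed hosted zone ID AWS documents for CloudFront alias targets, and `d111111abcdef8.cloudfront.net.` is a placeholder distribution name rather than my real one.

```shell
# Print a change batch that UPSERTs an alias A record pointing at CloudFront.
# Arguments: record name, CloudFront distribution domain name.
alias_change_batch() {
  record_name=$1
  target_dns_name=$2
  cat <<EOF
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "${record_name}",
        "Type": "A",
        "AliasTarget": {
          "HostedZoneId": "Z2FDTNDATAQYW2",
          "DNSName": "${target_dns_name}",
          "EvaluateTargetHealth": false
        }
      }
    }
  ]
}
EOF
}

# Placeholder distribution name, for illustration only.
alias_change_batch andrewalexander.io. d111111abcdef8.cloudfront.net.
```

Note there is no TTL or IP address anywhere in the record: Route53 answers with whatever the target currently resolves to. Saving the output to a file and passing it to `aws route53 change-resource-record-sets --hosted-zone-id <your zone id> --change-batch file://alias.json` would apply it.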

Original hybrid DNS setup

For my static site (this one), I originally just had a CNAME pointing from andrewalexander.io to the static S3 URL: http://andrewalexander.io.s3-website-us-east-1.amazonaws.com/. As mentioned above, Namecheap did offer the ability to redirect my naked domain.

Quick aside about static sites on S3: For starters - static websites on S3 have odd DNS resolution. No matter what resolves in between, the top level hostname must match the bucket name exactly. So I could theoretically have many different CNAMEs/redirects between andrewalexander.io and the actual s3 website URL that returns the content - when it eventually gets to the s3 URL, it parses out the bucket name and does some kind of magical routing I won’t even pretend to begin to understand. Something about that still feels odd.

Magic aside, the site was redirecting properly at this point, but I didn’t like that it was HTTP-only. As it turns out, you can’t get HTTPS on a static S3 website without introducing yet another Amazon service - CloudFront, Amazon’s content delivery network.

It was at this point that I introduced Route53. I wasn’t sure if I was going to like CloudFront or not. There was a fun issue around invalidating objects that I got to work through (I have a separate blurb about it down below), but all in all, it turned out to be great for what I needed.

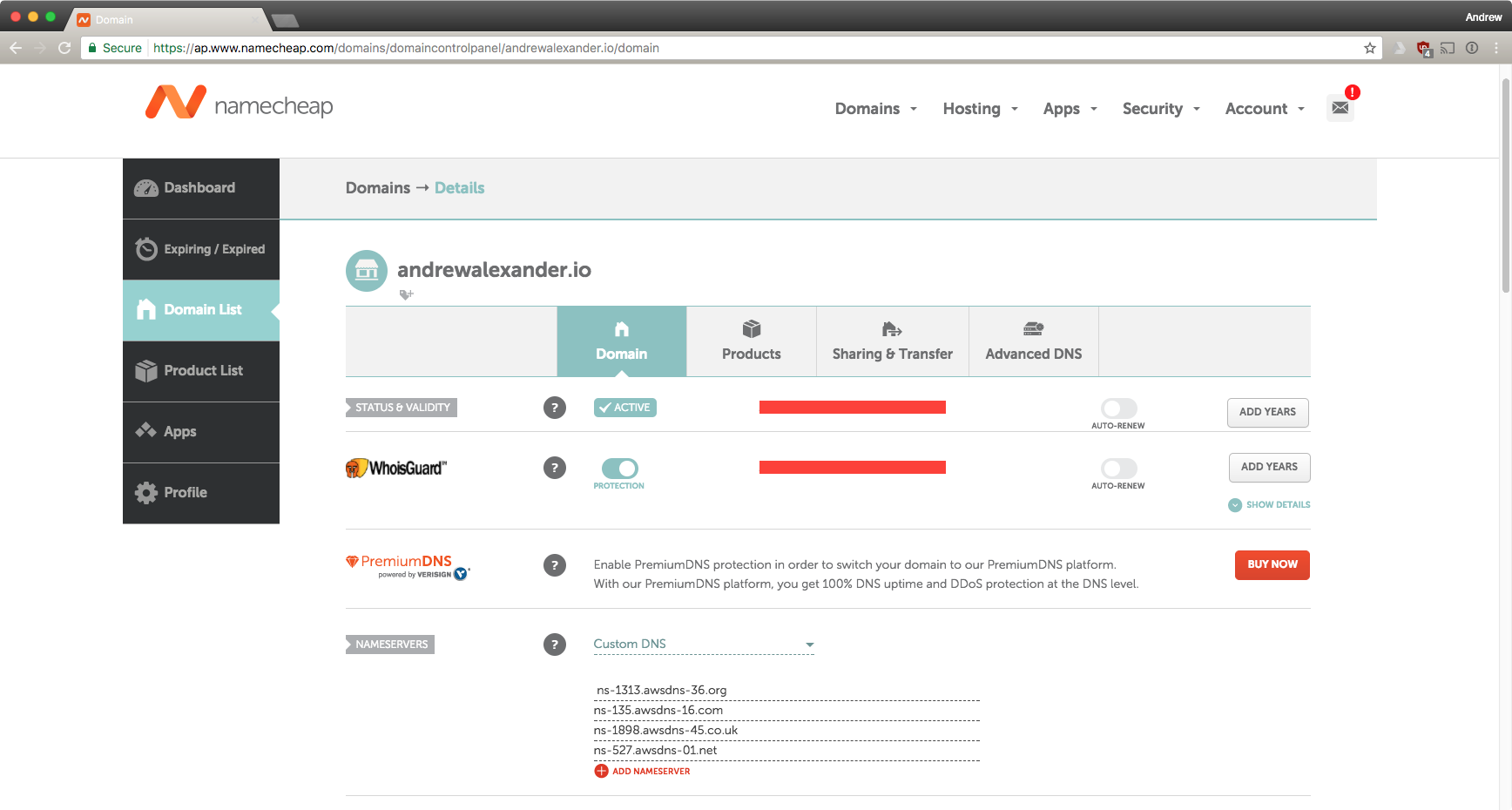

Original Namecheap DNS Settings

- Created the andrewalexander.io Hosted Zone in Route53
  - This generates an SOA and 4 NS records automatically
- Created an A Record in Namecheap’s Basic DNS panel to point to the Upspin server’s IP address
- Created a www (CNAME) in Namecheap’s Basic DNS panel to point to Route53

The issue arose around the SOA and NS records generated automatically for me. When Route53 creates these for you, you are not supposed to touch them… The SOA that Route53 gave was claiming to be the “Start of Authority” for andrewalexander.io, but Namecheap had certainly already established SOA by the time it got to Route53. I didn’t think anything of this, but there were some weird caching behaviors that started to happen, and I was getting wildly unpredictable results trying to resolve my domain over the couple of days that followed. I didn’t think to capture any data until well after I resolved the issue (pun intended), and only after moving the DNS over did I realize that there were duplicate SOA records. Maybe somebody else can explain what was really going on - all I know is I was getting inconsistent results from a dig any +trace +all andrewalexander.io.

Once I noticed the duplicate SOA records, I figured I should just put all my DNS eggs in one basket with Route53, and that’s what I did… But not before trying a couple of things.

I removed the andrewalexander.io hosted zone from Route53 and created upspin.andrewalexander.io instead - different SOA for a different subdomain. I just made the www/CNAME in Namecheap point to Route53, and everything seemed okay after a few minutes when my DNS was returning the new name server information. At this point, my automation bug had really kicked in and I lost the patience to wait and see if the problem was really fixed for good - I jumped into Route53 all the way.

Setting up DNS with Route53

The first thing I should have done was set up Route53 and AWS Certificate Manager so that I could easily get an SSL certificate with CloudFront. Doing so requires you to prove you own your domain (seems logical…). To verify this, they send an email to a few of the usual suspects (admin, webmaster, postmaster, etc.), all @andrewalexander.io. Well, since I didn’t do this before blindly selecting and accepting the “Custom DNS” option (with no name servers entered at the time), I didn’t realize that I was removing the email forwarding I had set up with Namecheap’s Basic DNS plan. Ouch. So this is where I signed up for the Rackspace email - I knew I was going to need to receive mail at this address once the migration to Route53 was done.

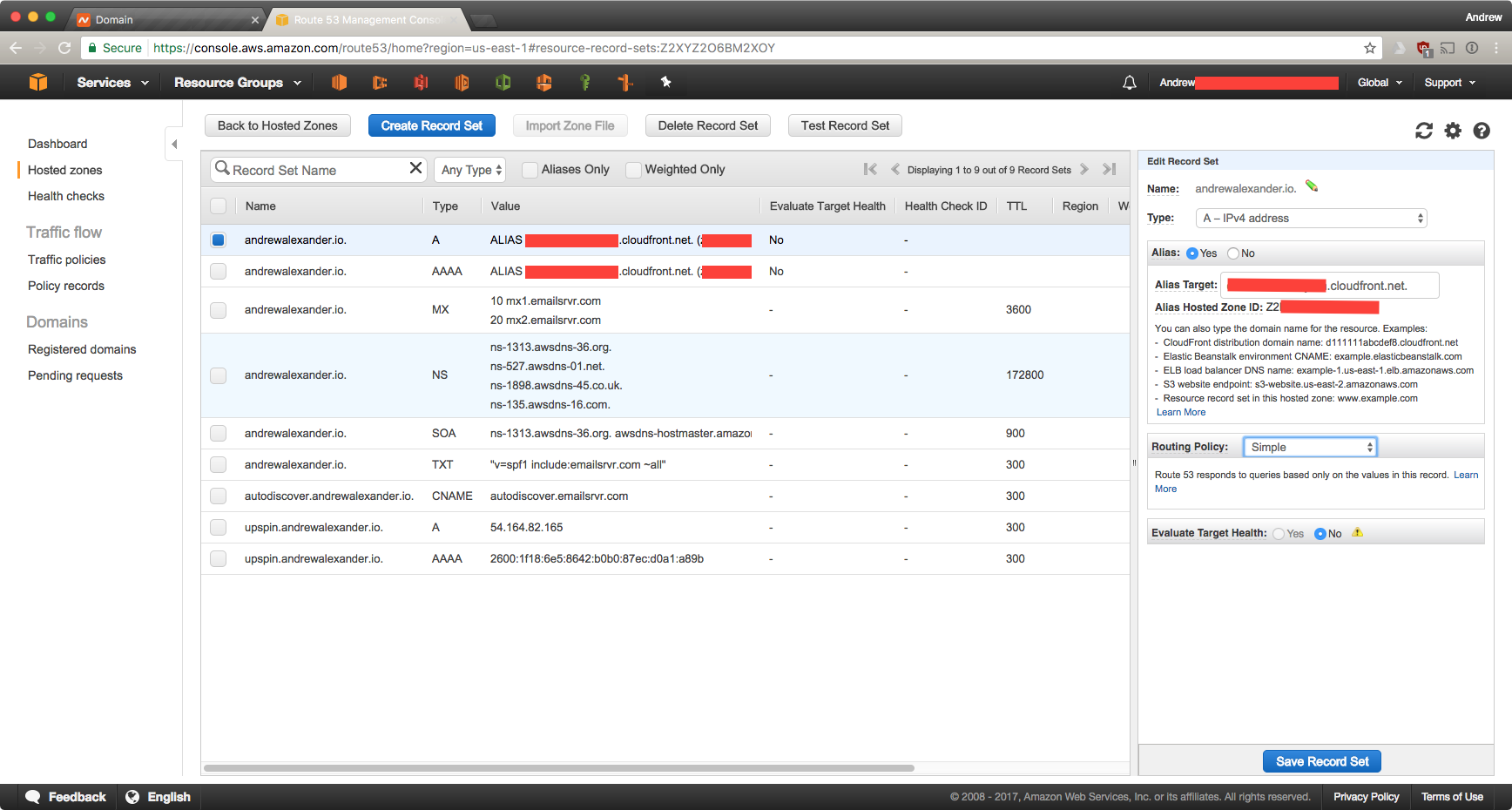

Quite interestingly, Route53 doesn’t seem to check at all about domain ownership for any hosted zone you create. Perhaps this is why things were failing earlier for me? DNS’ SOAs are doing the correct thing? Not sure if I’m just mis-remembering, but in writing this I definitely just created a “google.com” hosted zone without any issues. And yet, no DNS queries are returning my entries - part of the magic of DNS ;). Either way, once the hosted zone was created, I needed to update Namecheap’s DNS settings with the new nameservers for my domain:
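One way to check that the delegation really took effect is to compare the nameservers Route53 assigned to the hosted zone with what public DNS returns. Below is a small sketch of that comparison. The nameserver names in the demo are made-up placeholders; in practice the expected list could come from `aws route53 get-hosted-zone --id <your zone id> --query 'DelegationSet.NameServers' --output text` and the observed list from `dig +short NS andrewalexander.io`.

```shell
# Normalize a newline-separated nameserver list read from stdin:
# lowercase, strip any trailing dot, sort.
normalize_ns() {
  tr '[:upper:]' '[:lower:]' | sed 's/\.$//' | sort
}

# Succeed when both lists name the same set of servers.
same_nameservers() {
  [ "$(printf '%s\n' "$1" | normalize_ns)" = "$(printf '%s\n' "$2" | normalize_ns)" ]
}

# Hypothetical values, for illustration only.
expected='ns-2048.awsdns-64.com
ns-2049.awsdns-65.net'
observed='NS-2049.awsdns-65.net.
ns-2048.awsdns-64.com.'

if same_nameservers "$expected" "$observed"; then
  echo "delegation matches"
else
  echo "delegation differs"
fi
```

Until the two lists match from wherever you are querying, resolvers may still be handing out answers from the old nameservers.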

Fortunately for me, that little hosted zone [lack of] verification quirk let me create MX records in Route53 that actually resolved and pointed to the Rackspace mail servers. This let me create my SSL certificate and secure the website you’re now on.

Once the nameserver information propagated, actually adding the rest of the records into Route53 was as straightforward as you would hope. The CloudFront distribution is listed as an ALIAS, which - if you look at the output of a dig query - does indeed have multiple A and AAAA records (redundancy; the IPv6 ones were misleading - look at the 4th hextet).

```
$ dig andrewalexander.io aaaa +short
2600:9000:203c:7400:1d:f242:3580:93a1
2600:9000:203c:4c00:1d:f242:3580:93a1
2600:9000:203c:6800:1d:f242:3580:93a1
2600:9000:203c:ca00:1d:f242:3580:93a1
2600:9000:203c:f400:1d:f242:3580:93a1
2600:9000:203c:1a00:1d:f242:3580:93a1
2600:9000:203c:5a00:1d:f242:3580:93a1
2600:9000:203c:3200:1d:f242:3580:93a1

$ dig andrewalexander.io a +short
52.84.127.122
52.84.127.65
52.84.127.59
52.84.127.190
52.84.127.175
52.84.127.249
52.84.127.180
52.84.127.166
```
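Since the AAAA answers look nearly identical at a glance, a quick way to see what actually differs is to split them on the colons. This is just a sketch using the addresses copied from the output above; the function names are my own.

```shell
# AAAA answers copied from the dig output above.
aaaa_records='2600:9000:203c:7400:1d:f242:3580:93a1
2600:9000:203c:4c00:1d:f242:3580:93a1
2600:9000:203c:6800:1d:f242:3580:93a1
2600:9000:203c:ca00:1d:f242:3580:93a1
2600:9000:203c:f400:1d:f242:3580:93a1
2600:9000:203c:1a00:1d:f242:3580:93a1
2600:9000:203c:5a00:1d:f242:3580:93a1
2600:9000:203c:3200:1d:f242:3580:93a1'

# Distinct values of the 4th hextet (field 4 when splitting on ":").
fourth_hextets() {
  printf '%s\n' "$aaaa_records" | cut -d: -f4 | sort -u
}

# The same addresses with the 4th hextet removed.
without_fourth_hextet() {
  printf '%s\n' "$aaaa_records" | cut -d: -f1-3,5-8 | sort -u
}

echo "distinct 4th hextets: $(fourth_hextets | wc -l | tr -d ' ')"
echo "distinct remainders:  $(without_fourth_hextet | wc -l | tr -d ' ')"
```

For this output there are 8 distinct 4th hextets and a single remainder, so the eight records really are eight different addresses that differ only in that one group.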

CloudFront woes

So… CloudFront is a content delivery network. The goal of any CDN is to cache content on as many edge nodes as possible for better access times for clients requesting the content. By default, it caches individual objects for around 24 hours. With a static website naively hosted in S3 and fronted with CloudFront, any updates to a file (say… index.html) are not felt until all the cached versions of the file expire or are invalidated. Invalidating too many files can incur a charge (though it’s a very high number for something simple like this website), but it still led to me needing a solution to update content quickly.

As Amazon themselves point out, the best way to handle this problem is to use versioning in your file names. I knew that was going to take me a bit longer to do and I wanted something now, so the first naive attempt was a simple bash script to update my site. It runs hugo (generating the static content and placing it in a public/ directory), invalidates everything in the CloudFront distribution, and then uploads the fresh files to S3, marking these new files as the latest to propagate out in the distribution. That begins the replication process to the edge nodes.

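```bash
#!/bin/bash

# Build the static site into public/
hugo

# Invalidate every cached object in the CloudFront distribution
aws cloudfront create-invalidation --distribution-id E20XXXXXXXXXX1 --paths "/*"

# Upload the freshly generated files to the S3 bucket
aws s3 sync public s3://andrewalexander.io
```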
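For comparison, the versioned-file-name approach could look something like the sketch below. It is not what my script does today; it only shows one way to derive a file name from a file's contents so that a changed file gets a new URL instead of needing an invalidation. It assumes GNU `sha256sum` is available, and the `versioned_name` helper and demo file are made up for the example.

```shell
# Print a cache-busting name for a file by embedding a short content hash,
# e.g. public/css/site.css -> public/css/site.<hash>.css
versioned_name() {
  src=$1
  # First 8 hex characters of the SHA-256 digest (assumes GNU sha256sum).
  hash=$(sha256sum "$src" | cut -c1-8)
  base=${src##*/}
  case "$base" in
    *.*) printf '%s.%s.%s\n' "${src%.*}" "$hash" "${src##*.}" ;;
    *)   printf '%s.%s\n' "$src" "$hash" ;;
  esac
}

# Demo on a throwaway file so nothing in a real public/ directory is touched.
demo_dir=$(mktemp -d)
printf 'body { color: black; }\n' > "$demo_dir/site.css"
versioned_name "$demo_dir/site.css"
```

The same content always yields the same name, and any edit yields a different one, so assets named this way could be uploaded with a long `--cache-control` max-age on `aws s3 cp` while the pages that reference them point at the new name.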